A perceptual interface is one that allows a computer user to interact with the computer without having to use the normal keyboard and mouse. These interfaces are realised by giving the computer the capability of interpreting the user's movements or voice commands.

We are particularly concerned with using head orientation to control cursor movement, blinks to simulate mouse clicks, and gesture input.

For many people with physical disabilities, computers form an essential tool for communication, environmental control, education and entertainment. However, access to the computer may be made more difficult by a person's disability.

We are attempting to determine head orientation by tracking three non-collinear facial features, e.g. the eyes and nose. Given the features' relative positions when the user is looking straight at the screen, we can estimate changes in head orientation from the features' instantaneous locations. We may use this differential orientation to control the cursor.
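A minimal sketch can illustrate why the three features must be non-collinear. It assumes, purely for illustration, that the movement of the features in the image is approximated by a 2D affine map; this is not necessarily the method used in this project. Under that assumption, three point correspondences determine the map uniquely, and the computation fails exactly when the reference points lie on a line. The function name and all coordinates are hypothetical.

```python
def affine_from_three_points(ref, cur):
    """Return (A, t) such that cur[i] = A * ref[i] + t for three point pairs.

    ref: feature positions when the user looks straight at the screen.
    cur: the same features' positions in the current frame.
    """
    (x0, y0), (x1, y1), (x2, y2) = ref
    (u0, v0), (u1, v1), (u2, v2) = cur

    # Edge vectors of the reference triangle, as columns of P = [[a, b], [c, d]].
    a, c = x1 - x0, y1 - y0
    b, d = x2 - x0, y2 - y0
    det = a * d - b * c
    if abs(det) < 1e-9:
        # Collinear reference features give a singular P: no unique solution.
        raise ValueError("reference features are collinear")

    # Edge vectors of the current triangle, as columns of Q = [[e, f], [g, h]].
    e, g = u1 - u0, v1 - v0
    f, h = u2 - u0, v2 - v0

    # A = Q * inverse(P), written out for the 2x2 case.
    A = [[(e * d - f * c) / det, (f * a - e * b) / det],
         [(g * d - h * c) / det, (h * a - g * b) / det]]

    # The translation maps the first reference point onto its current position.
    t = (u0 - (A[0][0] * x0 + A[0][1] * y0),
         v0 - (A[1][0] * x0 + A[1][1] * y0))
    return A, t
```

Turning the matrix `A` into actual head angles would need a further model of the head and camera, which this sketch does not attempt.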

Example results to follow.

We are active in two areas:

In this project we have been investigating methods of controlling the cursor position by head movements, specifically by tracking the nose: if you look upwards, your nose moves upwards and the cursor will follow.

To achieve this, we must find the nostrils. This is made easier by first finding the face: the nostrils then appear as two nearby dark regions within it. We can find the face by locating face-coloured pixels.

Face colour is influenced by ambient illumination and the amount of pigmentation in the skin. Fortunately, the variation in skin colour due to differences in pigmentation is comparatively small, so if we can deal with the variations caused by illumination differences, we should be able to identify possible skin pixels.

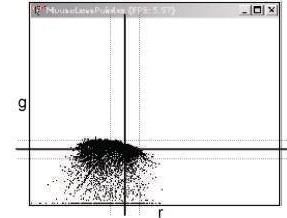

Several methods of normalising colour data have been suggested. The simplest is to make the sum of the red, green and blue components equal to one. A slightly more complex method, but one that may give better results, is to use the log-opponent representation, as suggested by Fleck and Forsyth. We have chosen to use normalised red and green, as it is computationally cheaper.
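As a minimal sketch of the simplest normalisation, each component is divided by the sum of red, green and blue, and only the normalised red and green are kept, since the blue value is then redundant. The function name and the handling of black pixels are assumptions made for illustration.

```python
def normalised_rg(r, g, b):
    """Return (r', g') where r' = R/(R+G+B) and g' = G/(R+G+B)."""
    total = r + g + b
    if total == 0:
        # A black pixel has no defined chromaticity; returning the neutral
        # point (1/3, 1/3) is an arbitrary choice made for this sketch.
        return (1 / 3, 1 / 3)
    return (r / total, g / total)
```

Scaling all three components by the same factor, as a uniform change in brightness would, leaves the result unchanged: `normalised_rg(100, 50, 50)` and `normalised_rg(200, 100, 100)` both give `(0.5, 0.25)`.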

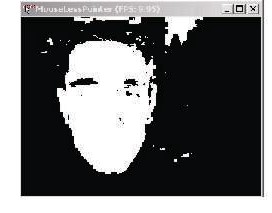

The following images show the intermediate results as the data is processed.

A typical head and shoulders input image.

The red-green histogram of this image and the mean and range of colours that correspond to the skin tones.

The input image thresholded according to the region indicated in the histogram. Whilst the face pixels have been found, so too have similarly coloured pixels in the background. If we grow the connected regions of the image, the background regions are found to be smaller than the face region, so we can decide what is background and what is face.
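The thresholding and region-growing steps can be sketched as follows. This is an illustration under stated assumptions rather than the project's implementation: the skin range is taken to be a simple rectangle in normalised red-green space, regions are grown with 4-connectivity, and the largest region is taken to be the face. All function names and any range values passed in are hypothetical.

```python
from collections import deque


def threshold_mask(rg_image, r_range, g_range):
    """Mark pixels whose normalised (r, g) falls inside the given ranges."""
    return [[r_range[0] <= r <= r_range[1] and g_range[0] <= g <= g_range[1]
             for (r, g) in row]
            for row in rg_image]


def largest_region(mask):
    """Return the set of (row, col) pixels in the largest 4-connected region."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    best = set()
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Grow a new region from this seed pixel (breadth-first).
            region = set()
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.add((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) > len(best):
                best = region
    return best


def bounding_box(region):
    """Return (top, left, bottom, right) of a non-empty set of (row, col)."""
    ys = [y for y, _ in region]
    xs = [x for _, x in region]
    return (min(ys), min(xs), max(ys), max(xs))
```

Keeping only the largest region relies on the face dominating a head-and-shoulders image; a large skin-coloured background object would defeat this simple rule.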

The final image shows a bounding box superimposed on the input image. Also shown are two crosses indicating the locations of the nostrils. The average location is tracked and used to drive the cursor.
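How the average nostril location might drive the cursor can be sketched as below. The relative-motion mapping, the `gain` value and the screen size are assumptions chosen for illustration, and the function names are hypothetical; the source states only that the average location is tracked and used to drive the cursor.

```python
def nostril_midpoint(left, right):
    """Average the two nostril positions, each given as (x, y) in pixels."""
    return ((left[0] + right[0]) / 2, (left[1] + right[1]) / 2)


def update_cursor(cursor, prev_mid, mid, gain=4.0, screen=(1920, 1080)):
    """Move the cursor by the scaled frame-to-frame motion of the midpoint.

    Image and screen y both grow downwards, so an upward nose movement
    moves the cursor up. Whether x needs mirroring depends on the camera
    setup and is not handled here.
    """
    dx = (mid[0] - prev_mid[0]) * gain
    dy = (mid[1] - prev_mid[1]) * gain
    # Clamp so the cursor stays on the screen.
    x = min(max(cursor[0] + dx, 0), screen[0] - 1)
    y = min(max(cursor[1] + dy, 0), screen[1] - 1)
    return (x, y)
```

For example, if the midpoint moves from `(12, 20)` to `(13, 18)` between frames, a cursor at `(100, 100)` moves to `(104.0, 92.0)` with the default gain.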

We have been able to achieve acceptable frame rates with modest hardware. The next stage of this research is to integrate this tracker with a regular operating system.

We are interested in replacing the mouse with a set of gestures. Specifically, we want to be able to replace the point-and-click and point-and-drag operations so that we can work on applications without having to use the mouse. As anyone who has seen the greasy fingermarks on the screens in an undergraduate laboratory knows, pointing at the screen is a very natural way of selecting or indicating something!

We want to be able to locate and track the fingertip in an image sequence. We also want to be able to guess what the user is indicating. This latter task implies that we will have to perform some analysis of the document that the user is processing.

Sample results to follow....

We also want to design systems that recognise and interpret gestures, and are capable of learning new gestures.

More information and example results to follow.

Last modified

March 2016