We present a novel approach for visual tracking of structured behaviour as observed

in human-computer interaction. An automatically acquired variable-length Markov

model is used to represent the high-level structure and temporal ordering of gestures.

Continuous estimation of hand posture is handled by combining the model

with annealed particle filtering. The stochastic simulation updates and automatically

switches between different model representations of hand posture that correspond

to distinct gestures. The implementation executes in real time and demonstrates

significant improvement in robustness over comparable methods. We provide

a measurement of user performance when our method is applied to a Fitts' law

drag-and-drop task, and an analysis of the effects of the latency that it introduces.
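To make the Fitts' law evaluation concrete, the sketch below computes the index of difficulty and fits the usual linear movement-time model MT = a + b * ID. It assumes the Shannon formulation, ID = log2(D / W + 1); the abstract does not say which formulation was used, and the function names and sample numbers are illustrative only, not measurements from the paper.

```python
import math

def index_of_difficulty(distance, width):
    """Shannon formulation of Fitts' index of difficulty, in bits (assumed here)."""
    return math.log2(distance / width + 1.0)

def fit_fitts(ids, times):
    """Ordinary least-squares fit of MT = a + b * ID; returns (a, b)."""
    n = len(ids)
    mean_id = sum(ids) / n
    mean_mt = sum(times) / n
    sxx = sum((x - mean_id) ** 2 for x in ids)
    sxy = sum((x - mean_id) * (y - mean_mt) for x, y in zip(ids, times))
    b = sxy / sxx                 # seconds per bit
    a = mean_mt - b * mean_id     # intercept; absorbs constant delays such as latency
    return a, b

if __name__ == "__main__":
    # Hypothetical drag-and-drop trials: (target distance, target width, time in s).
    trials = [(100, 50, 0.62), (200, 50, 0.81), (400, 50, 1.05), (400, 25, 1.24)]
    ids = [index_of_difficulty(d, w) for d, w, _ in trials]
    a, b = fit_fitts(ids, [t for _, _, t in trials])
    print(f"a = {a:.3f} s, b = {b:.3f} s/bit, throughput ~ {1.0 / b:.2f} bit/s")
```

Comparing the fitted intercept and slope between the tracker and a reference input device is one plausible way to quantify how tracking latency affects user performance.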

For more details see:

N. Stefanov, A. Galata, R. Hubbold, Int. Journal of Computer Vision and Image Understanding (CVIU), accepted for publication subject to minor revision.

Nikolay Stefanov, Aphrodite Galata, Roger Hubbold, Real-time hand tracking with Variable-length Markov Models of behaviour, IEEE Int. Workshop on Vision for Human-Computer Interaction (V4HCI), in conjunction with CVPR 2005. Best Paper Award.

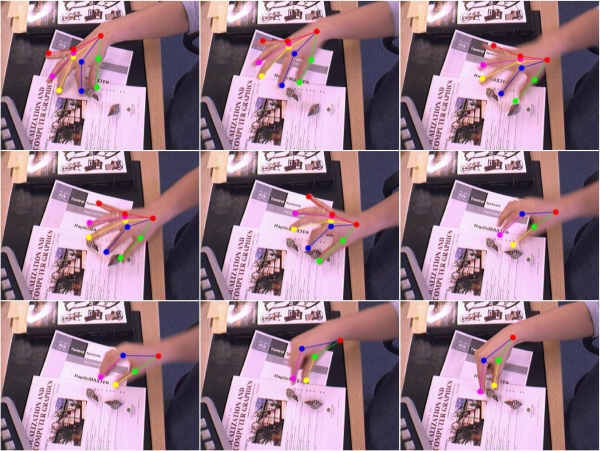

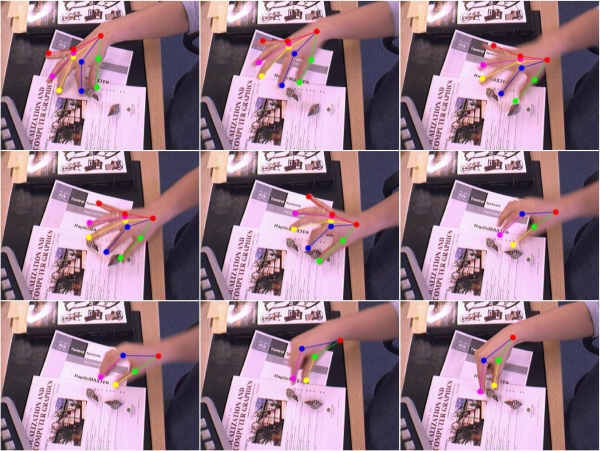

Figure 1: Tracking results demonstrating the handling of fast motion and

frequent gesture changes. Even though the video sequence exhibits a lot of

fast motion, tracking is successful due to the model's knowledge of the

underlying behaviour.
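The idea of a behaviour model predicting the next gesture can be illustrated with a much simplified variable-length Markov model. The sketch below counts gesture contexts up to a maximum order and predicts from the longest context seen often enough. The class name, the `min_count` threshold, and the gesture labels are assumptions made for illustration; the published method learns its model automatically and may use a different criterion for deciding which contexts to keep.

```python
from collections import defaultdict

class SimpleVLMM:
    """Toy variable-length Markov model over discrete gesture labels."""

    def __init__(self, max_order=3, min_count=2):
        self.max_order = max_order    # longest context (memory) considered
        self.min_count = min_count    # evidence needed to trust a context
        self.counts = defaultdict(lambda: defaultdict(int))

    def fit(self, sequences):
        """Count every context of length 0..max_order that precedes each symbol."""
        for seq in sequences:
            for i, symbol in enumerate(seq):
                for k in range(self.max_order + 1):
                    if i - k < 0:
                        break
                    context = tuple(seq[i - k:i])
                    self.counts[context][symbol] += 1
        return self

    def predict(self, history):
        """Next-symbol distribution from the longest sufficiently observed suffix."""
        history = tuple(history)
        for k in range(min(self.max_order, len(history)), -1, -1):
            context = history[len(history) - k:]
            following = self.counts.get(context)
            if following:
                total = sum(following.values())
                if total >= self.min_count:
                    return {s: c / total for s, c in following.items()}
        return {}

if __name__ == "__main__":
    # Hypothetical gesture vocabulary for a drag-and-drop interaction.
    demo = [["point", "grab", "drag", "release"]] * 3
    model = SimpleVLMM().fit(demo)
    print(model.predict(["point", "grab"]))
```

In a tracker, such a distribution could be used to decide which hand-posture representation to hypothesise next, so that fewer particles are wasted on gestures the behaviour model considers unlikely.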

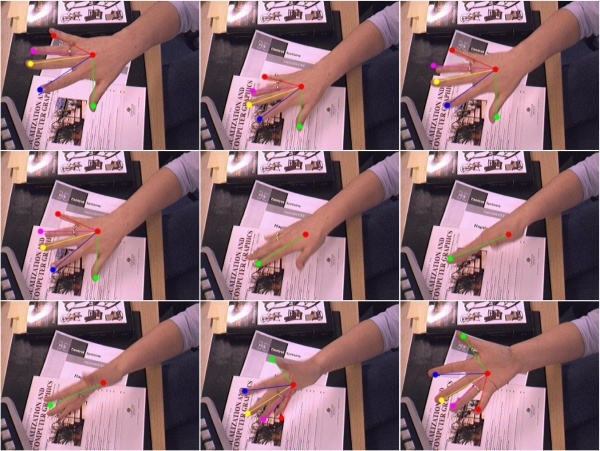

Figure 2: Tracking results demonstrating the handling of 3D rotation and

change of scale. We used 300 particles and three iterations for annealing.
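As a rough illustration of what 300 particles and three annealing iterations involve, here is a minimal sketch of one time step of a generic annealed particle filter. Only the particle count and the number of layers come from the caption above; the annealing exponents, the noise schedule, the two-dimensional state, and the Gaussian likelihood are assumptions chosen to keep the example small. It also omits the gesture-model switching described in the abstract.

```python
import math
import random

def annealed_step(particles, log_likelihood, betas=(0.2, 0.5, 1.0),
                  noise=0.5, shrink=0.5, rng=random):
    """One time step of a simplified annealed particle filter.

    particles: list of state vectors (lists of floats).
    log_likelihood: maps a state to log p(observation | state).
    betas: one annealing exponent per layer; small values flatten the
           likelihood so early layers explore broadly.
    """
    sigma = noise
    last_layer = len(betas) - 1
    for layer, beta in enumerate(betas):
        # Weight each particle by the likelihood raised to the power beta.
        log_w = [beta * log_likelihood(p) for p in particles]
        peak = max(log_w)  # subtract the maximum for numerical stability
        weights = [math.exp(lw - peak) for lw in log_w]
        # Resample with replacement in proportion to the weights.
        particles = rng.choices(particles, weights=weights, k=len(particles))
        # Diffuse between layers with progressively smaller noise.
        if layer < last_layer:
            particles = [[x + rng.gauss(0.0, sigma) for x in p] for p in particles]
            sigma *= shrink
    return particles

def mean_state(particles):
    """Point estimate: the mean of the particle set."""
    n = len(particles)
    return [sum(p[d] for p in particles) / n for d in range(len(particles[0]))]

if __name__ == "__main__":
    rng = random.Random(0)
    target = [1.0, -2.0]  # hypothetical true state

    def log_lik(state):
        # Assumed Gaussian observation model with standard deviation 0.3.
        return -sum((x - t) ** 2 for x, t in zip(state, target)) / (2 * 0.3 ** 2)

    start = [[rng.gauss(0.0, 2.0), rng.gauss(0.0, 2.0)] for _ in range(300)]
    print(mean_state(annealed_step(start, log_lik, rng=rng)))
```

The design choice worth noting is the rising exponent: early layers use a flattened likelihood so that particles are not trapped by a single sharp peak, and the final layer uses the likelihood unchanged.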
